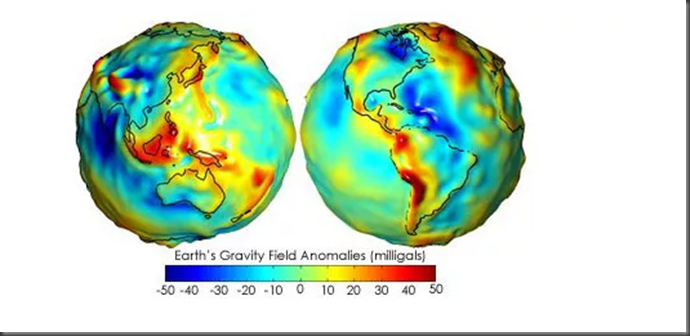

When doing surveys in the field, it's critical to know the correct elevation. But the earth has the shape of a lumpy potato, so calculating the elevation is not straightforward.

Traditionally, elevation is measured relative to a local vertical reference that follows the geoid. For the Netherlands this is 'NAP height' (https://www.rijkswaterstaat.nl/zakelijk/open-data/normaal-amsterdams-peil), EPSG:5709 (https://spatialreference.org/ref/epsg/5709/). Elevations in the Netherlands range from minus 6.78 meter (Nieuwerkerk aan den IJssel) to 322.38 meter (Vaals).

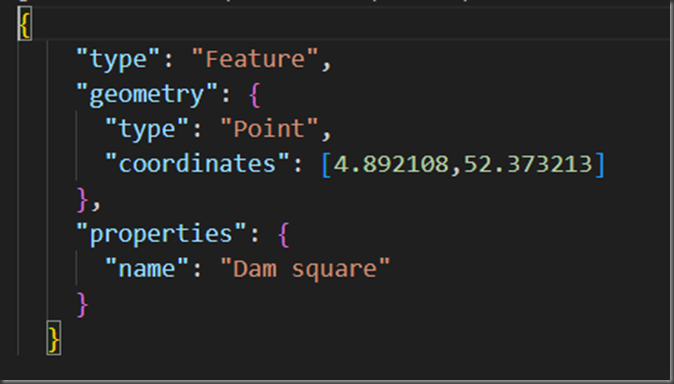

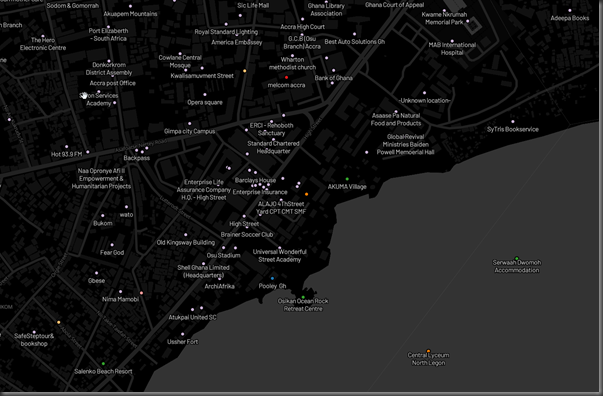

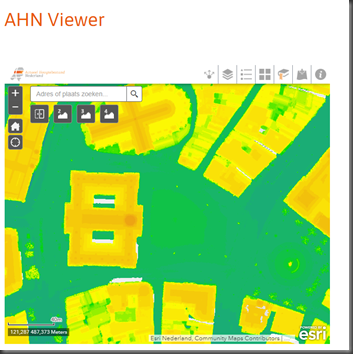

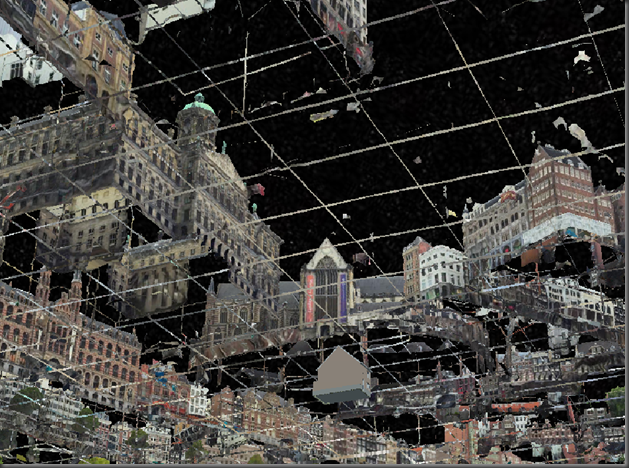

For example, Dam Square in Amsterdam has an elevation of about 2.6 meter NAP:

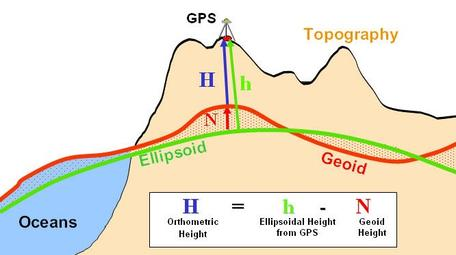

A simpler (but less accurate) model assumes the earth has the shape of an ellipsoid. The ellipsoid and the geoid differ, see:

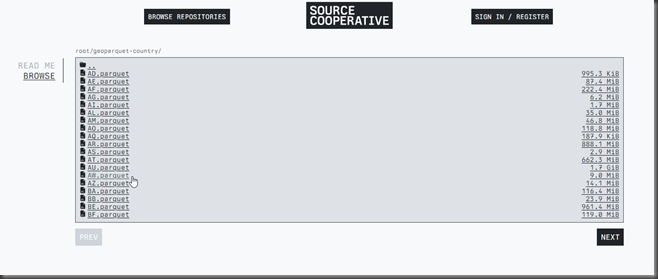

With the 'Coordinates Converter' tool (https://tools.propelleraero.com/coordinates-converter) we can transform heights between geoid and ellipsoid. For Dam Square in Amsterdam:

So for Dam Square in Amsterdam, 2.68 meter relative to the geoid (NAP) corresponds to 45.6 meter relative to the ellipsoid.
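The two numbers are linked by a simple relation: ellipsoidal height h equals orthometric (NAP) height H plus the geoid height N at that location, h = H + N. The sketch below is a minimal illustration of that relation, assuming the idealized form h = H + N (deflection of the vertical is ignored). It back-computes N for Dam Square from the NAP height 2.68 meter and the 45.663 meter ellipsoidal height that cs2cs returns further on in this post.

```python
# Minimal sketch of h = H + N (all values in meters).
# H: orthometric height (NAP), N: geoid height, h: ellipsoidal height.


def ellipsoidal_height(orthometric_h: float, geoid_n: float) -> float:
    """Return ellipsoidal height h = H + N."""
    return orthometric_h + geoid_n


def geoid_height(ellipsoidal_h: float, orthometric_h: float) -> float:
    """Return geoid height N = h - H."""
    return ellipsoidal_h - orthometric_h


if __name__ == "__main__":
    nap_height = 2.68          # Dam Square, meters relative to NAP
    ellipsoid_height = 45.663  # cs2cs result quoted later in the post
    n = geoid_height(ellipsoid_height, nap_height)
    print(f"N at Dam Square: {n:.3f} m")
    print(f"h = H + N: {ellipsoidal_height(nap_height, n):.3f} m")
```

So at Dam Square the geoid lies roughly 43 meter above the ellipsoid.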

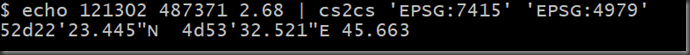

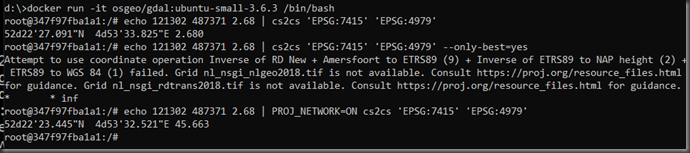

From the command line we can use the GDAL/PROJ tool 'cs2cs' to convert from geoid to ellipsoid heights. With QGIS it's installed in the bin directory (for example c:\Program Files\QGIS 3.32.0\bin).

For example, to convert from Dutch horizontal coordinates (EPSG:28992) plus vertical coordinates (EPSG:5709, based on the geoid) to the ellipsoid model:

Here we use the Dutch composite coordinate system EPSG:7415 = EPSG:28992 (horizontal) + EPSG:5709 (vertical). I've collected some composite EPSG codes for other countries at https://github.com/bertt/epsg
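The cs2cs call can also be scripted. cs2cs takes the source and target CRS as arguments and reads 'x y z' lines on stdin; `-d` sets the number of output decimals. This is a sketch with two assumptions: the target CRS is EPSG:4979 (WGS 84 geographic 3D), which may differ from the target used in the screenshots, and the RD coordinates for Dam Square are hypothetical approximations.

```python
import shutil
import subprocess


def cs2cs_command(source_crs: str, target_crs: str, decimals: int = 3) -> list[str]:
    """Build a cs2cs argument list: cs2cs -d <n> <source_crs> <target_crs>."""
    return ["cs2cs", "-d", str(decimals), source_crs, target_crs]


def cs2cs_input(x: float, y: float, z: float) -> str:
    """cs2cs reads one whitespace-separated coordinate per line on stdin."""
    return f"{x} {y} {z}\n"


if __name__ == "__main__":
    # Assumed target: EPSG:4979 (WGS 84 geographic 3D, ellipsoidal heights).
    cmd = cs2cs_command("EPSG:7415", "EPSG:4979")
    # Hypothetical, approximate RD coordinates for Dam Square plus NAP height.
    line = cs2cs_input(121400, 487400, 2.68)
    if shutil.which("cs2cs"):
        result = subprocess.run(cmd, input=line, capture_output=True, text=True, check=False)
        print(result.stdout.strip() or result.stderr.strip())
    else:
        print("cs2cs not found on PATH; would run:", " ".join(cmd))
```

Because EPSG:4979 is geographic, the output order is latitude, longitude, ellipsoidal height.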

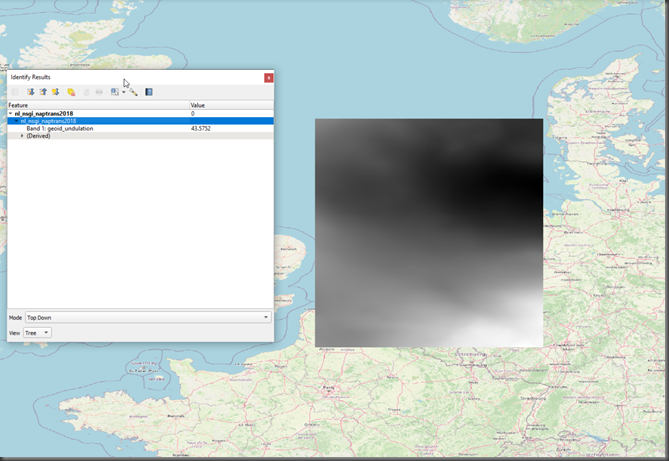

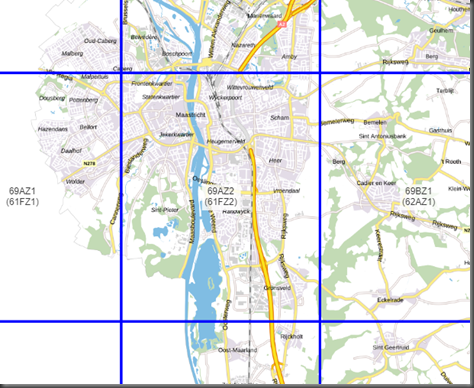

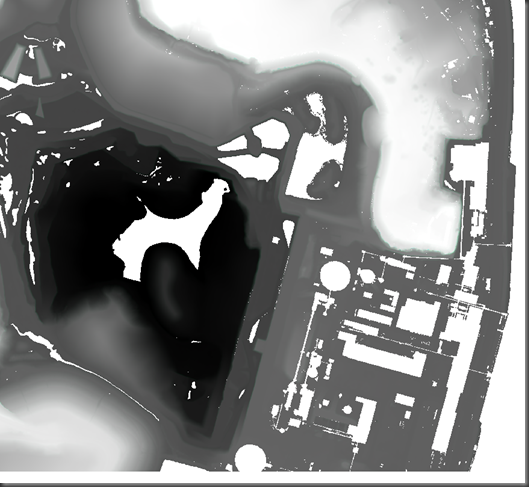

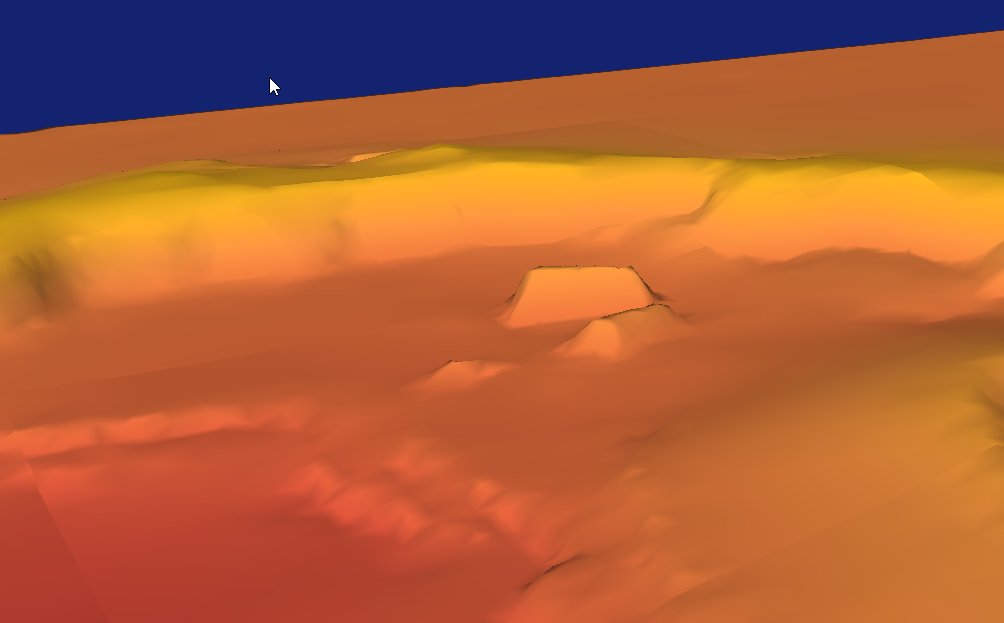

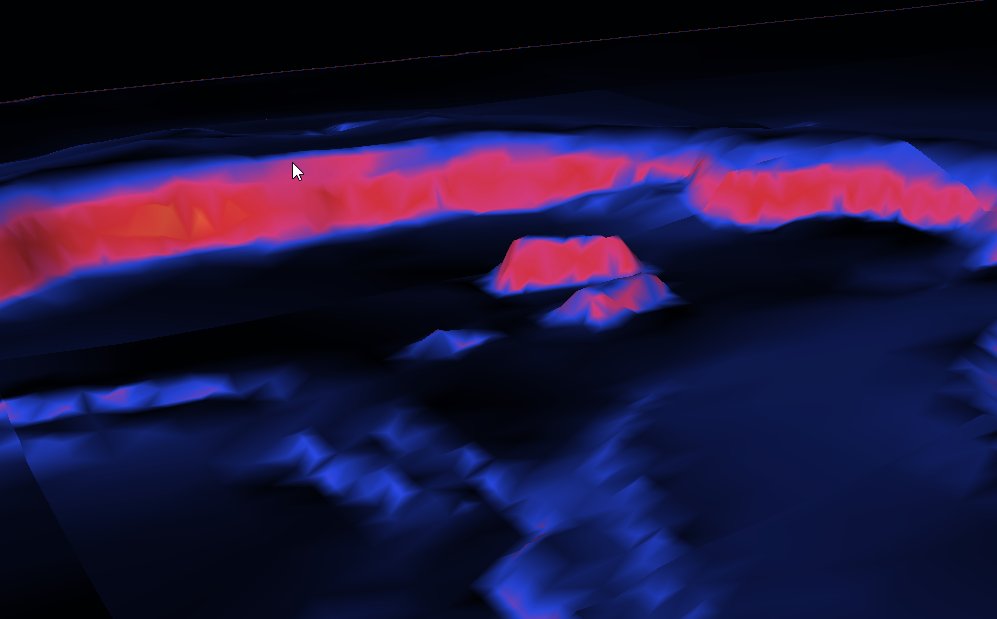

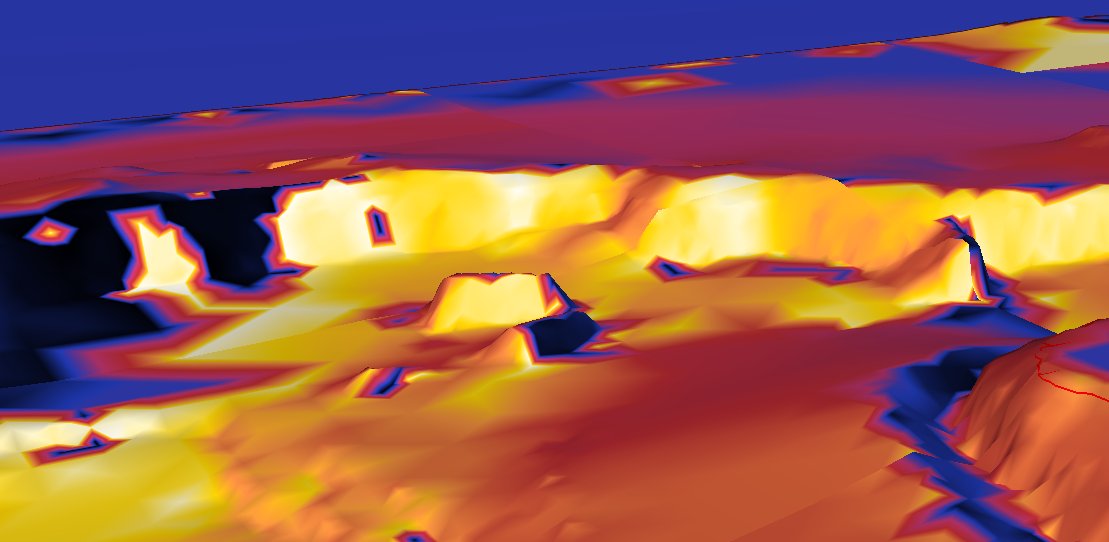

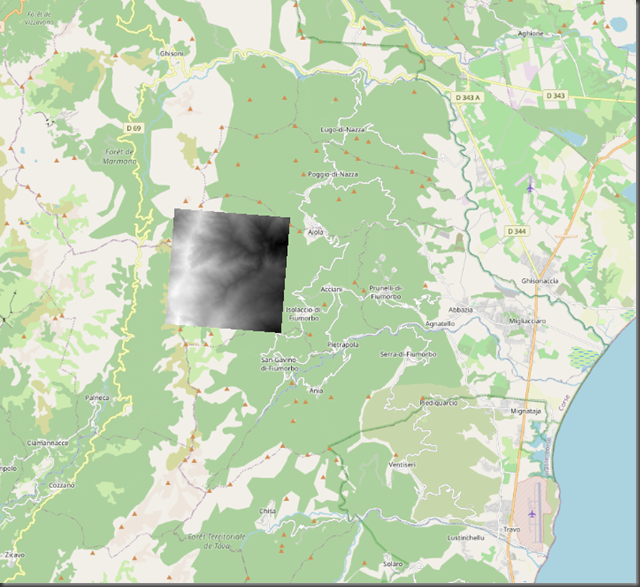

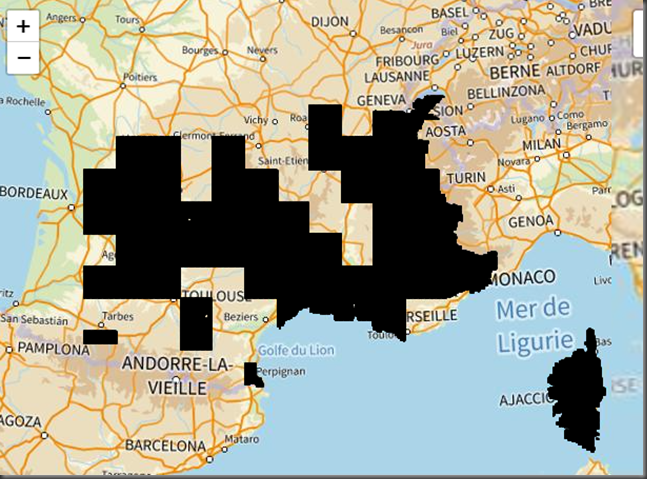

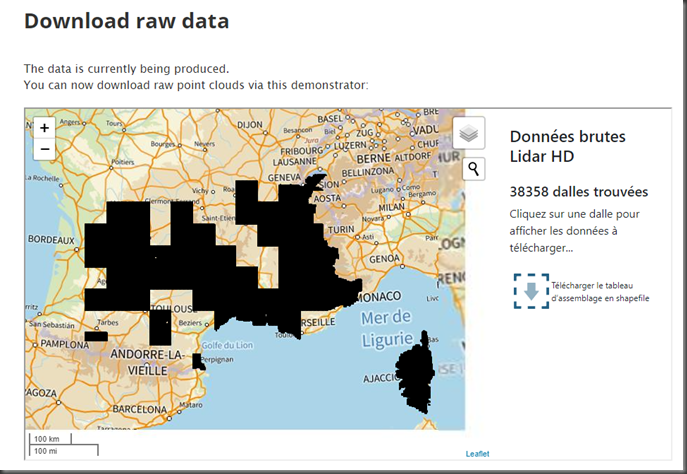

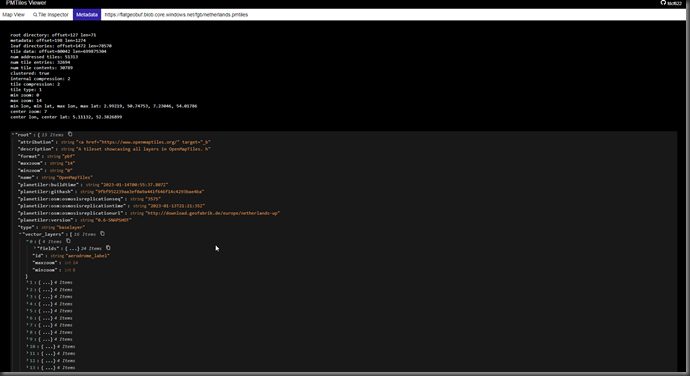

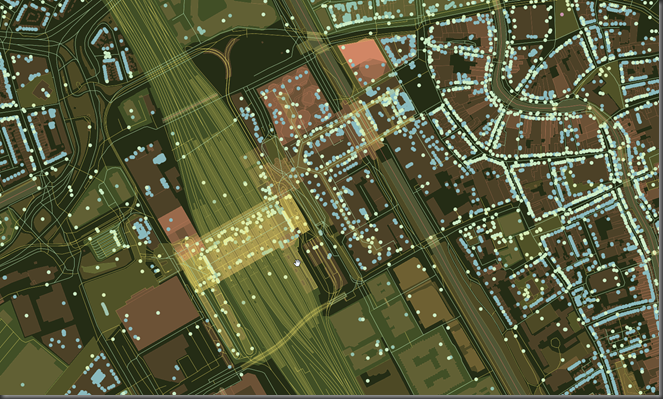

GDAL/PROJ uses a set of GeoTIFF grid files to transform the coordinates correctly. In QGIS they are installed in the 'share/proj' folder (for example c:\Program Files\QGIS 3.32.0\share\proj). For the Netherlands the grid 'nl_nsgi_naptrans2018.tif' is used (download at https://cdn.proj.org/nl_nsgi_naptrans2018.tif). It looks like:
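To check whether a grid is available locally, you can look for the file in the PROJ data directories. The sketch below is a simplified approximation of that lookup, not PROJ's actual search logic (PROJ also checks a user-writable cache directory and compile-time paths). It assumes the `PROJ_DATA` environment variable (PROJ 9.1+) or the older `PROJ_LIB`, possibly holding several paths separated by the OS path separator, plus the example QGIS folder from above. If the file isn't found, it builds the CDN URL mentioned above.

```python
import os
from pathlib import Path
from typing import Iterable, Optional

CDN_BASE = "https://cdn.proj.org/"


def proj_search_dirs() -> list[str]:
    """Candidate grid folders: PROJ_DATA / PROJ_LIB entries, then the QGIS example path."""
    dirs: list[str] = []
    for var in ("PROJ_DATA", "PROJ_LIB"):
        value = os.environ.get(var)
        if value:
            dirs.extend(p for p in value.split(os.pathsep) if p)
    dirs.append(r"c:\Program Files\QGIS 3.32.0\share\proj")
    return dirs


def find_grid(name: str, search_dirs: Iterable[str]) -> Optional[Path]:
    """Return the first existing path to the grid file, or None."""
    for folder in search_dirs:
        candidate = Path(folder) / name
        if candidate.is_file():
            return candidate
    return None


def grid_url(name: str) -> str:
    """Download location of a grid on the PROJ CDN."""
    return CDN_BASE + name


if __name__ == "__main__":
    grid = "nl_nsgi_naptrans2018.tif"
    found = find_grid(grid, proj_search_dirs())
    print(found if found else f"not found locally, download from {grid_url(grid)}")
```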

Across the Netherlands its values range from about 41 meter in Groningen to 47 meter in Limburg.

It can happen that the grids are not installed (to save space). For example, the Docker image osgeo/gdal:ubuntu-small-3.6.3 does not include the grids, so transforming gives 2.68 meter again:
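Because the missing-grid case returns the input height, a quick sanity check on the output can catch it. The sketch below is a hypothetical heuristic, not a PROJ feature: it flags a result as suspicious when h minus H falls outside the 41 to 47 meter range of the Dutch grid mentioned above, widened by an assumed 1 meter margin.

```python
# Approximate geoid heights in nl_nsgi_naptrans2018.tif:
# about 41 m (Groningen) to 47 m (Limburg).
NL_GEOID_RANGE_M = (41.0, 47.0)


def grid_probably_applied(
    nap_height: float,
    ellipsoidal_height: float,
    expected_range: tuple[float, float] = NL_GEOID_RANGE_M,
    margin: float = 1.0,  # assumed tolerance in meters
) -> bool:
    """Heuristic: True if h - H lies in the expected geoid-height range.

    Without the grid, the output height equals the input (h - H == 0),
    which falls far outside the range.
    """
    offset = ellipsoidal_height - nap_height
    low, high = expected_range
    return (low - margin) <= offset <= (high + margin)


if __name__ == "__main__":
    print(grid_probably_applied(2.68, 45.663))  # → True (grid used)
    print(grid_probably_applied(2.68, 2.68))    # → False (grid missing)
```

The check only applies within the Netherlands; elsewhere the expected range would have to come from the local geoid grid.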

When adding the option '--only-best=yes' there is an error message about the missing grid. When setting the environment variable 'PROJ_NETWORK=ON' the grid is downloaded and the calculation succeeds (result 45.663 meter). We can also run the tool 'projsync' (https://proj.org/en/9.2/apps/projsync.html) to download all the grids at once (670 MB).
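Both options can be combined in a script: '--only-best=yes' makes cs2cs fail loudly instead of silently skipping the grid, and 'PROJ_NETWORK=ON' lets PROJ fetch the grid on demand. This is a sketch assuming cs2cs from PROJ 9.2 or newer (where '--only-best' exists) and EPSG:4979 as the ellipsoidal target CRS; the RD coordinates are hypothetical approximations for Dam Square.

```python
import os
import shutil
import subprocess


def cs2cs_env(network: bool = True) -> dict[str, str]:
    """Copy of the current environment with PROJ network access switched on/off."""
    env = dict(os.environ)
    env["PROJ_NETWORK"] = "ON" if network else "OFF"
    return env


def cs2cs_strict_command(source_crs: str, target_crs: str) -> list[str]:
    """cs2cs call that errors out if the best transformation's grid is missing."""
    return ["cs2cs", "--only-best=yes", "-d", "3", source_crs, target_crs]


if __name__ == "__main__":
    # Assumed target CRS (EPSG:4979) and hypothetical Dam Square coordinates.
    cmd = cs2cs_strict_command("EPSG:7415", "EPSG:4979")
    if shutil.which("cs2cs"):
        proc = subprocess.run(
            cmd,
            input="121400 487400 2.68\n",
            capture_output=True,
            text=True,
            env=cs2cs_env(network=True),
            check=False,
        )
        print("stdout:", proc.stdout.strip())
        print("stderr:", proc.stderr.strip())
    else:
        print("cs2cs not found on PATH")
```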

For more about this topic, see:

– Convert between Orthometric and Ellipsoidal Elevations using GDAL https://spatialthoughts.com/2019/10/26/convert-between-orthometric-and-ellipsoidal-elevations-using-gdal/

– When Proj Grid-Shifts Disappear http://blog.cleverelephant.ca/2023/02/proj-network.html